GPT-3

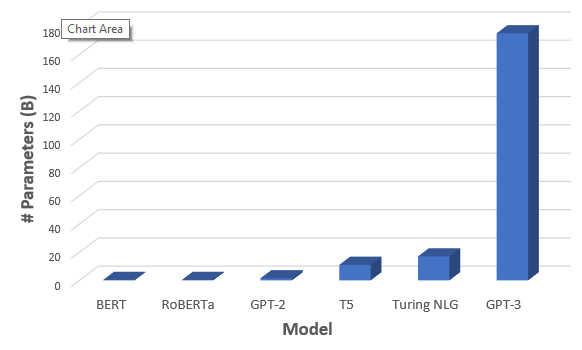

Language models are growing exponentially in size, and on some tasks their output is approaching human-level quality.

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. GPT-3 has 175 billion trainable parameters.
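To make "autoregressive" concrete, here is a minimal sketch of the generation loop: the model predicts one token, appends it to the text so far, and repeats. The bigram lookup table is only a toy stand-in and is not how GPT-3 works internally; GPT-3 uses a Transformer network that conditions on the whole preceding context, whereas this toy looks at the previous token only. The names `train_bigram` and `generate` are hypothetical, chosen for illustration.

```python
import random
from collections import defaultdict


def train_bigram(tokens):
    """Record which tokens follow each token (toy stand-in for a trained model)."""
    following = defaultdict(list)
    for prev, nxt in zip(tokens, tokens[1:]):
        following[prev].append(nxt)
    return following


def generate(following, start, max_tokens, seed=0):
    """Autoregressive loop: each new token is sampled given the text so far."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(max_tokens):
        # Assumption: the toy model conditions on the last token only.
        candidates = following.get(output[-1])
        if not candidates:
            break  # no known continuation, stop early
        output.append(rng.choice(candidates))
    return output


corpus = "the model reads the text and the model writes the text".split()
table = train_bigram(corpus)
print(" ".join(generate(table, "the", 8)))
```

The loop has the same shape at any scale: what changes between this toy and a large model is how the next token is predicted, not the fact that generation proceeds one token at a time.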

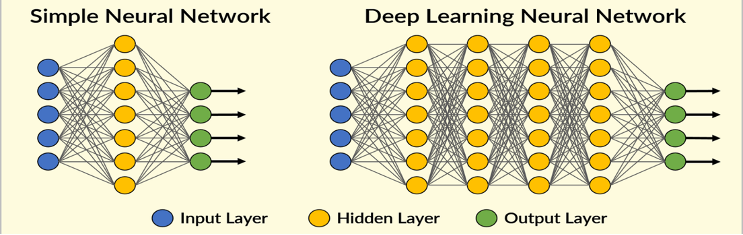

You might ask why the number of parameters in a model matters. Parameters are the weights a neural network learns during training. A network with more of them runs more calculations per prediction and can capture more patterns from its training data, which tends to produce more accurate output. So imagine 175 billion machine learning parameters:

At that scale, the network delivers exceptionally strong performance when decoding text in real time.
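To put 175 billion parameters in perspective, the rough arithmetic below counts the parameters of a single small fully connected layer and then estimates how much memory it takes just to store GPT-3's weights. This is a back-of-the-envelope sketch under stated assumptions: 4 bytes per parameter for 32-bit floats, 2 bytes for 16-bit floats, and weights only (no activations or optimizer state). The helper names are hypothetical.

```python
GPT3_PARAMS = 175_000_000_000  # 175 billion, the figure quoted above


def dense_layer_params(inputs: int, outputs: int) -> int:
    """Parameters in one fully connected layer: a weight matrix plus biases."""
    return inputs * outputs + outputs


def parameter_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# A tiny layer with 4 inputs and 3 outputs has 4*3 weights + 3 biases.
print(dense_layer_params(4, 3))

# Assumption: 4 bytes per parameter (float32) or 2 bytes (float16).
print(parameter_memory_gb(GPT3_PARAMS, 4))
print(parameter_memory_gb(GPT3_PARAMS, 2))
```

Under these assumptions the weights alone come to roughly 700 GB at 32-bit precision and 350 GB at 16-bit precision, far more than a single consumer GPU can hold.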

For more information on GPT-3, please visit the OpenAI website.

Comparison of the number of parameters in recent popular pre-trained NLP models; GPT-3 clearly stands out: